Insight

Defeating motor neuron disease: Scott-Morgan’s mission to become the first human cyborg

After being diagnosed with terminal MND in 2017, Dr Peter Scott-Morgan was determined to both survive and thrive with the help of cutting-edge technology. To do this, Scott-Morgan enlisted the help of technology companies to turn him into the world’s first human cyborg, as well as demonstrate the ability of technology to transform the lives of anyone with disabilities. Allie Nawrat finds out more.

In 2017, scientist and robotics expert Dr Peter Scott-Morgan was diagnosed with motor neurone disease (MND), the same degenerative disease that affected theoretical physicist Professor Stephen Hawking.

MND causes messages from a person’s neurones to gradually stop reaching the muscles, which in turn begin to weaken and waste away – a process that eventually results in paralysis. However, as the condition does not affect the patient’s mental acuity, it is common for MND sufferers to be locked into their paralysed bodies with no way to communicate.

At the time of his diagnosis, Scott-Morgan was given two years to live. However, he was determined to not only survive this debilitating illness but thrive with the help of revolutionary technology. By working with an unprecedented consortium of technology companies, Scott-Morgan wants to transform himself into the world’s first human cyborg by augmenting himself with artificial intelligence (AI) and robotics. This means that once Scott-Morgan is locked in, he will still be able to communicate and have a high quality of life.

On a mission to thrive as well as survive

The subject of a recent Channel 4 documentary, Scott-Morgan’s vision to become the world’s first human cyborg has seven pillars, which are overseen and integrated by DXC.

Two of these involve surgeries to keep him alive as his muscles weaken and he becomes locked in. The first of these (performed in July 2018) was an integrated triple-ostomy to allow him to continue to eat and go to the toilet in a dignified manner. This is not a standard procedure for MND patients and was requested by Scott-Morgan to improve his future quality of life.

The second surgery was a laryngectomy, the surgical removal of the larynx, which is likewise not usually offered to MND patients. Its aim is to prevent saliva entering the lungs, which can cause pneumonia.

Scott-Morgan underwent this elective surgery in the winter of 2018. This operation left him without the ability to speak, but Scott-Morgan was aware his MND would lead to this outcome anyway.

CereProc and Scott-Morgan’s synthetic voice

DXC director of AI Jerry Overton, who has worked closely with Scott-Morgan on this project, explains that being able to express yourself in your own voice is viewed by Scott-Morgan as fundamental to being human. This is why Scott-Morgan, in advance of losing his voice, began exploring how to achieve pillar three of his vision: a synthetic voice that sounded like him.

He was disappointed by the technology he found, until in mid-2018, he looked into CereProc, which had worked with US film reviewer Roger Ebert to clone his voice after a similar surgery. CereProc chief scientific officer Dr Matthew Aylett explains the company is committed to building voices that express personality and emotion by leveraging different styles of speech.

The company worked with Scott-Morgan to record a huge amount of audio; across more than 15 hours of recording sessions, he recorded more than 1,000 individual phases in different voice styles.

“We produced an intimate voice style, an enthusiastic, presentational voice style, a neutral voice style and a conversational voice style” for Scott-Morgan, notes Aylett.

Scott-Morgan’s voice was then built using neural text-to-speech (TTS) software. Aylett explains that this approach allows for voices to be built with much less data than previous unit selection software, while retaining a high-quality outcome. Neural TTS allowed CereProc to build a very smooth and naturally sounding voice for Scott-Morgan.

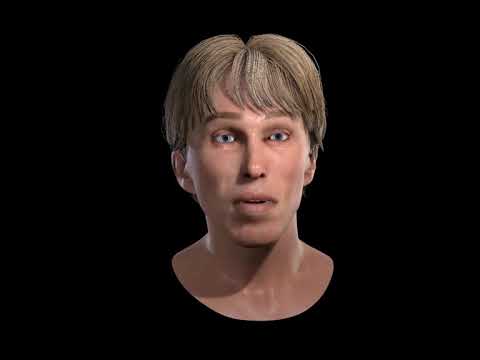

As he would eventually lose the ability to control his facial expressions, Scott-Morgan wanted to have a 3D animated avatar of himself attached to his wheelchair that would appear to be speaking. This fourth pillar of the vision has been developed by California-based Embody Digital and can be visualised here.

The company worked with Scott-Morgan to record a huge amount of audio; across more than 15 hours of recording sessions, he recorded more than 1,000 individual phases in different voice styles.

“We produced an intimate voice style, an enthusiastic, presentational voice style, a neutral voice style and a conversational voice style” for Scott-Morgan, notes Aylett.

Scott-Morgan’s voice was then built using neural text-to-speech (TTS) software. Aylett explains that this approach allows for voices to be built with much less data than previous unit selection software, while retaining a high-quality outcome. Although the first voice CereProc built using neural TTS was very smooth, it did not sound like Scott-Morgan. However, as the technology has advanced, Aylett notes that CereProc has now produced a natural sounding voice for him.

As he would eventually lose the ability to control his facial expressions, Scott-Morgan wanted to have a 3D animated avatar of himself attached to his wheelchair that would appear to be speaking. This fourth pillar of the vision has been developed by California-based Embody Digital and can be visualised here.

Enter Intel with ACAT and word prediction

With a synthetic voice in place, the next challenge was enabling Scott-Morgan to use it to interact in the real world. This is where Intel and Lama Nachman, director of its anticipatory computing lab, became involved.

After being contacted by Scott-Morgan, Intel built on its Assistive Context-Aware Toolkit (ACAT), which it originally developed for Professor Hawking, adding significant functionality aimed at reducing the silence gap in conversations, explains Nachman.

Intel added gaze tracking to ACAT so that Scott-Morgan can use his eyes to type out the letters of the words he wants to say. Nachman explains that ACAT relies on a gaze tracker developed by Tobii to do this.

Although gaze control is a lot faster than the cheek trigger approach used by Hawking and would reduce the silence gap slightly, Nachman was interested in exploring how AI could further increase Scott-Morgan’s verbal spontaneity.

Intel therefore developed an AI-enabled tailored word predictor that brings up words based on what Scott-Morgan types using his gaze. As a result, “he only needs to type around 10% of all the letters before the word predictor kicks in and figures out what it is that he wants to say,” explains Nachman.
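The general idea of prefix-based word prediction can be illustrated with a short sketch. This is not Intel's ACAT code: the `PrefixPredictor` class, its method names and the sample `history` are all hypothetical, and a simple frequency count stands in for whatever AI model the real predictor uses. It shows how a predictor tailored to one person's vocabulary can surface the intended word after only a few typed letters.

```python
from collections import Counter


class PrefixPredictor:
    """Toy word predictor: ranks known words by how often the user has used them."""

    def __init__(self, history):
        # history: words the user has produced before (hypothetical sample data)
        self.counts = Counter(w.lower() for w in history)

    def suggest(self, prefix, k=3):
        """Return up to k known words starting with prefix, most frequent first."""
        prefix = prefix.lower()
        matches = [w for w in self.counts if w.startswith(prefix)]
        # Sort by descending frequency, then alphabetically so ties are stable.
        matches.sort(key=lambda w: (-self.counts[w], w))
        return matches[:k]

    def letters_needed(self, word):
        """Fewest typed letters after which `word` becomes the top suggestion."""
        word = word.lower()
        for n in range(1, len(word) + 1):
            top = self.suggest(word[:n], k=1)
            if top and top[0] == word:
                return n
        # Unknown words are never suggested, so every letter must be typed.
        return len(word)


history = "thank you thank you technology think thank wheelchair".split()
predictor = PrefixPredictor(history)
suggestions = predictor.suggest("th")
typed = predictor.letters_needed("technology")
```

In this toy history, typing "te" is enough for "technology" to become the top suggestion, so two of its ten letters are typed. The share of letters saved in practice depends entirely on the vocabulary and the model behind the predictor.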

Now Intel is working on an even more advanced system that would speed things up further. In this system, the AI listens to Scott-Morgan’s conversations and generates words based on what it has heard and how Scott-Morgan has previously responded in similar scenarios.

If what the system brings up is not quite what he wants, Scott-Morgan will also be able to type out his preferred response. Nachman notes that Intel is hoping to have this human-AI integrated word predictor deployed within a year.
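One simple way to picture a predictor that draws on past conversations is retrieval: compare what has just been heard with earlier prompts and offer the replies that followed the most similar ones. The sketch below is an assumption made for illustration only and says nothing about how Intel's system is built. The function names, the word-overlap (Jaccard) similarity measure and the `past_exchanges` data are all hypothetical.

```python
def tokens(text):
    """Lowercase a sentence and split it into a set of words."""
    return set(text.lower().replace("?", " ").replace(",", " ").split())


def jaccard(a, b):
    """Word overlap between two sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_replies(heard, past_exchanges, k=2):
    """Rank past replies by how similar their prompt was to what was just heard."""
    heard_tokens = tokens(heard)
    scored = [
        (jaccard(heard_tokens, tokens(prompt)), reply)
        for prompt, reply in past_exchanges
    ]
    # Drop replies whose prompt shares no words with what was heard.
    scored = [(score, reply) for score, reply in scored if score > 0]
    scored.sort(key=lambda pair: -pair[0])
    return [reply for _, reply in scored[:k]]


# Hypothetical record of (what was said to the user, how the user replied).
past_exchanges = [
    ("How are you feeling today?", "Very well, thank you."),
    ("Would you like some tea?", "Yes please."),
    ("What time is the interview?", "At three o'clock."),
]
replies = suggest_replies("How are you today?", past_exchanges)
```

When nothing similar has been heard before, the function returns an empty list, which corresponds to the case where the user falls back on typing the response letter by letter.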

The final two pillars: an autonomous wheelchair and exoskeleton

Being able to speak in his own voice is not the only thing Scott-Morgan considers fundamental to being human; moving around autonomously is another, and it is something he will be unable to do as the muscles in his hands, which he uses to control his wheelchair, waste away.

To resolve this challenge, DXC is developing a self-driving wheelchair for Scott-Morgan. Overton is hopeful that pillar six, a fully autonomous version based on voice commands (such as ‘take me to kitchen’), will be deployed in the middle of 2021.

DXC is further working with Permobil to enable Scott-Morgan’s wheelchair to act as an exoskeleton to support him to stand up or lie down.

Scott-Morgan’s Cyborg Harness And Robotic Locked-In Exoskeleton (or Charlie) is also likely to be fully ready for Scott-Morgan next year, according to Overton.

DXC and the cyborg universe

The ultimate aim is for Scott-Morgan to be able to control all five technological capabilities – his synthetic voice, avatar, word predictor, self-driving vehicle and exoskeleton – through a core user interface developed by DXC. This ‘cyborg universe’ relies on Microsoft’s mixed reality headset HoloLens.

Although CereProc’s speech synthesis, the avatar and DXC’s self-driving vehicle work in the cyborg universe, the capabilities Intel has built into ACAT for word prediction and gaze control are not yet compatible.

Therefore, DXC and Intel are working to find a way for them to be integrated or work side by side so Scott-Morgan can use the same interface for both and can “leverage muscle memory” to keep up his impressive gaze control speed, explains Nachman.

Beyond Peter 2.0

Importantly, for Scott-Morgan, this cyborg project is bigger than him. Through his foundation, Scott-Morgan hopes this technology can help to revolutionise the future for all disabled people.

To this end, he has pushed for a large proportion of the technology to be available open source.

This is something that Overton is particularly committed to. He notes: “We try to look for and use the most available, accessible, open and affordable hardware possible.”

Overton also explains that DXC is launching an open source programme with the foundation where anyone will be able to download and use software associated with the Peter 2.0 project, and then add value on top.

Intel is also committed to open source principles for ACAT. “That is something we want to enable the whole community with and frankly this is a community that gets left out time and time again,” says Nachman.

Although Aylett notes that CereProc’s bespoke voice for Scott-Morgan will not be available open source, he states that individuals can build their own voices with CereProc and then plug them into the other open source elements.

Aylett concludes that Scott-Morgan’s experience really demonstrates the power of technology to resolve significant medical challenges. It will act as proof that this can be done and empower “people with severe disabilities and with communication difficulties to say I am not happy with that - I want something better”.

Main image: Dr Peter Scott-Morgan. Credit (all images): Channel 4